
Nov 24, 2025

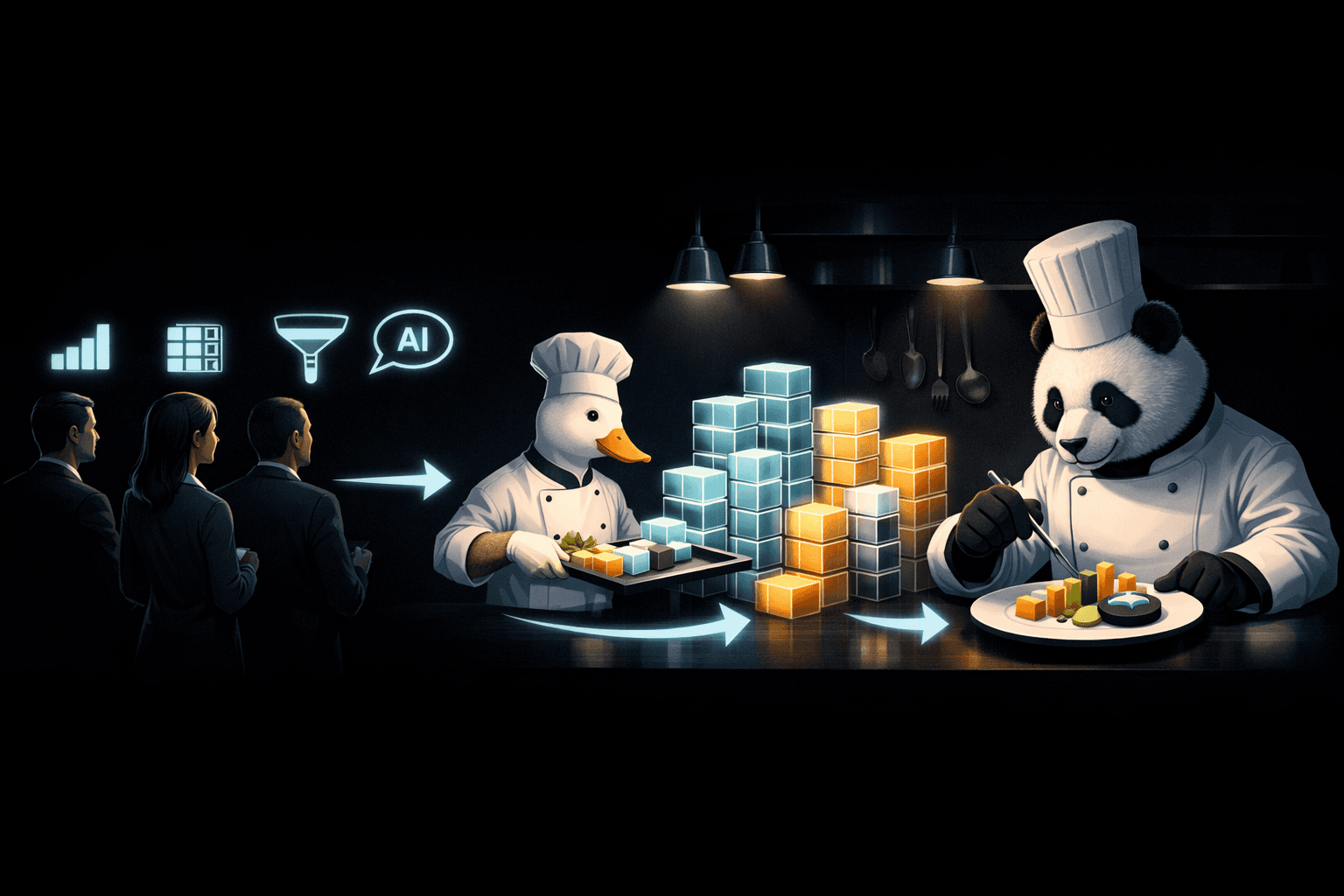

Building a data platform is like running a busy kitchen: every request is unique, complex, and expected instantly. This article shares how Pandas worked perfectly at smaller scale, but struggled as datasets and analytics demands grew. By pairing Pandas with DuckDB, heavy scanning, filtering, and aggregations moved to a faster “prep layer,” while Pandas handled flexible finishing. The result: drastically lower memory usage, near-instant queries, and 100× scalability - without rewriting systems or increasing infrastructure cost.

Running a data platform is a lot like operating a high-end restaurant kitchen. Customers place complex “orders”: specific cuts of data, seasoned with the right filters, and plated as beautiful representations and AI-powered summaries.

Every order is different, every customer has unique tastes.

And they all want their "meals" delivered fast.

When Pandas Ran the Kitchen

In the beginning, Pandas was our lone, incredibly talented master chef — slicing, dicing, pivoting, transforming and aggregating every single dish by hand!

For a while, it was perfect. Datasets were small enough (100,000 to just under a million rows, with fewer than 50 columns), and Pandas served them flawlessly. Requests like “Show me Q3 revenue by region, excluding refunds, as a pivot table” were answered instantly.
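A request like the Q3 revenue pivot can be sketched in plain Pandas as follows. This is a minimal illustration, not our production code: the `orders` table, its column names (`order_date`, `region`, `product`, `revenue`, `is_refund`), and the helper `q3_revenue_by_region` are all hypothetical.

```python
import pandas as pd

# Hypothetical order-level data; the schema is an assumption for illustration.
orders = pd.DataFrame({
    "order_date": pd.to_datetime(
        ["2025-07-03", "2025-08-14", "2025-09-21", "2025-09-30", "2025-05-02"]
    ),
    "region": ["EMEA", "EMEA", "APAC", "APAC", "EMEA"],
    "product": ["A", "B", "A", "A", "A"],
    "revenue": [120.0, 80.0, 200.0, 50.0, 999.0],
    "is_refund": [False, False, False, True, False],
})


def q3_revenue_by_region(df: pd.DataFrame, year: int) -> pd.DataFrame:
    """Pivot Q3 revenue by region and product, excluding refunds."""
    in_q3 = (df["order_date"].dt.year == year) & (df["order_date"].dt.quarter == 3)
    kept = df[in_q3 & ~df["is_refund"]]
    return kept.pivot_table(
        index="region",
        columns="product",
        values="revenue",
        aggfunc="sum",
        fill_value=0,
    )


pivot = q3_revenue_by_region(orders, 2025)
print(pivot)
```

Note that the whole `orders` frame has to be in memory before the first filter runs, which is fine at this size and becomes the bottleneck later.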

When Orders Outgrew the Chef

But as our customers’ appetites grew, so did the complexity of our menu — and the size of our ingredients:

From multi-month to multi-year, multi-source analytics

From single-table cuts to multi-dimensional, organization-wide analytics

From 100,000 rows to many millions of rows, with hundreds of columns

From seconds of processing to minutes — while devouring every bit of memory we had

Suddenly, we weren't serving intimate dinner parties anymore; we were catering massive events.

Pandas was still cooking the same way: bringing all the ingredients to the counter and working through them one by one. Because it loads the full dataset into memory before doing any work, what once consumed a modest amount of memory began devouring every available resource and still asking for more.
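To see why wide tables hurt, it helps to compare the memory held by a full DataFrame with the memory held by only the columns a request needs. The sketch below uses synthetic data; the sizes, column names, and resulting byte counts are illustrative assumptions, not measurements from our platform.

```python
import numpy as np
import pandas as pd

# Synthetic wide table: 50 numeric columns plus one label column.
rng = np.random.default_rng(0)
n_rows, n_cols = 10_000, 50
wide = pd.DataFrame(
    rng.random((n_rows, n_cols)),
    columns=[f"metric_{i}" for i in range(n_cols)],
)
wide["region"] = rng.choice(["EMEA", "APAC", "AMER"], size=n_rows)

# Memory held when every column is loaded.
full_bytes = int(wide.memory_usage(deep=True).sum())

# Memory held by the two columns a typical request actually touches.
needed = wide[["region", "metric_0"]]
needed_bytes = int(needed.memory_usage(deep=True).sum())

print(f"all columns:    {full_bytes:,} bytes")
print(f"needed columns: {needed_bytes:,} bytes")
```

The gap grows with every extra column and row, which is the pressure described above.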

Enter DuckDB — The Perfect Sous Chef

Our kitchen was delivering the dishes, but not at the speed our customers expected. We had three choices: renovate the kitchen entirely, add more space, or get a helping hand. Whichever option we chose could not increase cost, because we were in the middle of cost-optimization initiatives.

We chose the helping hand.

Enter DuckDB — the perfect partner for Pandas. Instead of Pandas having to slice through every ingredient, DuckDB could quickly scan through millions of records, grab exactly what we needed, and hand them over—neatly prepped.

DuckDB became our prep cook:

Reading only necessary columns

Filtering before loading anything into memory

Handling massive pivot/aggregation calculations at lightning speed

Pandas then takes the prepped data and adds the finishing transformation and aggregation touches our customers love. The handshakes were perfect and the workflows clicked!

Why It Works

DuckDB: SQL-style power on large datasets, optimized for analytics.

Pandas: Unmatched flexibility for complex data transformations and fine-tuned presentation.

Seamless onboarding: no rewrite was needed. We reused our existing SQL skills and Pandas code, so there was no disruption and integration was fast.

The Results

80–90% drop in memory usage for large operations

Queries that took minutes now finish in less than a second

Datasets 100× larger (rows × columns) handled without extra infrastructure cost

Customers kept all the features they loved — just faster and more efficient

The Bigger Lesson

Innovation isn’t always about replacing old tools. Sometimes it’s about pairing them smartly.

By letting DuckDB and Pandas play to their strengths, we unlocked speed, scalability, and new analytical possibilities — without sacrificing flexibility.

Our customers don’t see this complexity. They just get speed, reliability, and the outputs they expect.

Behind the scenes, the magic comes from a perfect kitchen partnership. And yes — our story includes a duck.